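This post describes how to use gpu-monitoring-tools, together with Prometheus Operator and kube-prometheus, to monitor a Kubernetes-based machine learning platform running on a cluster of Nvidia GPU nodes.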

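Monitoring options

- gpu-monitoring-tools (gmt below) offers several options for collecting metrics:

  - NVML Go Bindings (C API).

  - DCGM exporter (Prometheus metrics on DCGM).

- gmt also offers several options for the monitoring framework:

  - The Prometheus DaemonSet that uses the DCGM exporter directly; it covers only collection and monitoring.

  - Prometheus Operator + kube-prometheus (modified by Nvidia), which includes the full set of components for collection, monitoring, alerting, and visualization.

We use the second framework option. It also works on CPU-only machines that have no GPU.

We have verified that this setup can monitor, at the same time, the host hardware (CPU, GPU, memory, disk, and so on), the core Kubernetes components (apiserver, controller-manager, scheduler, and so on), and the running state of the business services deployed on Kubernetes.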

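What is an Operator

- For stateless applications, native Kubernetes resources such as Deployment already handle autoscaling, automatic restarts, and upgrades well.

- Stateful applications, such as databases, caches, and monitoring systems, need operational procedures that are specific to each application.

- An Operator packages the operational procedures for a specific application into software and extends the Kubernetes API through third-party resources, so that users can create, configure, and manage the application. It usually consists of a set of Kubernetes CRDs.

- Much like the relationship between a Kubernetes Controller and its Resource, an Operator takes the requests that users submit to the Controller and keeps the actual number and state of instances in line with what the user asked for, while hiding many of the detailed operations.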
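To make the CRD point concrete, here is a minimal sketch that lists the CRDs an installed Prometheus Operator has registered. It assumes the Operator's CRDs live in the `monitoring.coreos.com` API group and that `kubectl` is already configured for the cluster; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: show which CRDs belong to the Prometheus Operator.
# Assumption: the Operator registers its CRDs under monitoring.coreos.com.

# Keep only CRD names from the monitoring.coreos.com group (reads stdin).
filter_operator_crds() {
  grep -E '\.monitoring\.coreos\.com$' || true
}

# Print the Operator's CRDs found in the current cluster.
list_operator_crds() {
  kubectl get crd -o name | filter_operator_crds
}
```

Running `list_operator_crds` after the Operator is installed should print one line per custom resource type that it manages.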

Prerequisites

Images

Import the following images onto every node in the cluster:

    # If you build Prometheus on plain Kubernetes, create the resources from these two images; this only collects metrics and is not wired to monitoring alerts
    # docker pull nvidia/dcgm-exporter:1.4.6
    # docker pull quay.io/prometheus/node-exporter:v0.16.0

    # Operator base images
    docker pull quay.io/coreos/prometheus-operator:v0.17.0
    docker pull quay.io/coreos/hyperkube:v1.7.6_coreos.0

    # exporters
    docker pull nvidia/dcgm-exporter:1.4.3
    docker pull quay.io/prometheus/node-exporter:v0.15.2

    # Prometheus components
    docker pull quay.io/coreos/configmap-reload:v0.0.1
    docker pull quay.io/coreos/prometheus-config-reloader:v0.0.3
    docker pull gcr.io/google_containers/addon-resizer:1.7
    docker pull gcr.io/google_containers/kube-state-metrics:v1.2.0
    docker pull quay.io/coreos/grafana-watcher:v0.0.8
    docker pull grafana/grafana:5.0.0
    docker pull quay.io/prometheus/prometheus:v2.2.1
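If the nodes cannot pull from the registries themselves, one way to import the images everywhere is to pull once, save to a tarball, and load it on each node. The sketch below assumes passwordless SSH to every node and Docker on every node; the helper names and the node names in the usage line are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: pull an image once, then copy and load it on every node.
# Assumptions: passwordless SSH/scp to each node; Docker installed on each node.

# Turn an image reference into a safe tarball file name.
image_tarball_name() {
  printf '%s.tar\n' "$(printf '%s' "$1" | tr '/:' '__')"
}

# Pull one image locally, save it, then copy and load it on each given node.
distribute_image() {
  local image="$1"; shift
  local tarball node
  tarball="$(image_tarball_name "$image")"
  docker pull "$image"
  docker save -o "$tarball" "$image"
  for node in "$@"; do
    scp "$tarball" "$node:/tmp/$tarball"
    ssh "$node" "docker load -i /tmp/$tarball && rm -f /tmp/$tarball"
  done
}
```

For example, `distribute_image quay.io/prometheus/prometheus:v2.2.1 node1 node2` would load that image on two nodes; looping over the list above covers the rest.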

Helm charts

Download and extract the following Helm charts:

    wget https://nvidia.github.io/gpu-monitoring-tools/helm-charts/kube-prometheus-0.0.43.tgz
    tar zxvf kube-prometheus-0.0.43.tgz
    wget https://nvidia.github.io/gpu-monitoring-tools/helm-charts/prometheus-operator-0.0.15.tgz
    tar zxvf prometheus-operator-0.0.15.tgz

Installation

1. Configuration

Node labels

Label every GPU node that needs to be monitored.

    kubectl label no <nodename> hardware-type=NVIDIAGPU
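With more than a few GPU nodes, labeling can be scripted. This is a small sketch, not part of gmt: the function name and the `DRY_RUN` switch are hypothetical, and `--overwrite` is added so that re-running it is harmless.

```shell
#!/usr/bin/env bash
# Sketch: apply the hardware-type=NVIDIAGPU label to several nodes.
# With DRY_RUN=1 the kubectl commands are printed instead of executed.
label_gpu_nodes() {
  local node
  for node in "$@"; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "kubectl label node $node hardware-type=NVIDIAGPU --overwrite"
    else
      kubectl label node "$node" hardware-type=NVIDIAGPU --overwrite
    fi
  done
}
```

Afterwards, `kubectl get nodes -l hardware-type=NVIDIAGPU` should list exactly the nodes you labeled.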

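External etcd

With an external etcd, that is, an etcd cluster that was started beforehand rather than launched as containers when the Kubernetes cluster is initialized, you must specify the addresses of the etcd cluster.

Assume the external etcd members are at etcd0, etcd1, and etcd2, the client port is 2379, and they are accessed directly over HTTP.

    vim kube-prometheus/charts/exporter-kube-etcd/values.yaml

    #etcdPort: 4001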
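Before editing the chart values, it can help to confirm that each etcd member actually serves metrics over plain HTTP on the client port. A minimal sketch under the assumptions above (hosts etcd0, etcd1, etcd2, port 2379); the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: check that each external etcd member answers on /metrics over HTTP.

# Build the metrics URL for one etcd member (port defaults to 2379).
etcd_metrics_url() {
  local host="$1" port="${2:-2379}"
  printf 'http://%s:%s/metrics\n' "$host" "$port"
}

# Report, per member, whether the metrics endpoint is reachable.
check_etcd_members() {
  local host
  for host in "$@"; do
    if curl -fsS --max-time 5 -o /dev/null "$(etcd_metrics_url "$host")"; then
      echo "$host: ok"
    else
      echo "$host: unreachable"
    fi
  done
}
```

Run `check_etcd_members etcd0 etcd1 etcd2` from a machine that sits on the same network as the Prometheus pods, since that is the path the scrape will take.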

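You also need to add the corresponding panel data to the Grafana dashboard. Note:

- Add a "," at line 465.

- The panel just before line 465 is the one with "title": "Crashlooping Control Plane Pods".

- Insert the following content at line 465, keeping the indentation.

    vim kube-prometheus/charts/grafana/dashboards/kubernetes-cluster-status-dashboard.json

    {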

Exposing ports

Expose the access ports of Prometheus, Alertmanager, and Grafana for troubleshooting. These ports must be directly reachable from the development VPC.

    vim kube-prometheus/values.yaml

    alertmanager:

    vim kube-prometheus/charts/grafana/values.yaml

    service:

Alert receiver

Configure the alert receiver. We usually let the ControlCenter Service in the same cluster receive the alerts, convert their format, and forward them to IMS.

    vim kube-prometheus/values.yaml

    alertmanager:
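Once a receiver is configured, a hand-made alert is a quick way to check the whole path from Alertmanager to the receiver. The sketch below assumes your Alertmanager version still exposes the v1 endpoint `/api/v1/alerts` and that it is reachable at a URL you supply; the alert name, labels, and function names are made up for the test.

```shell
#!/usr/bin/env bash
# Sketch: push a manual test alert into Alertmanager.
# Assumption: the Alertmanager v1 API (/api/v1/alerts) is available.

# Build a one-element JSON alert list with the given name and severity.
build_test_alert() {
  local name="${1:-TestAlert}" severity="${2:-warning}"
  printf '[{"labels":{"alertname":"%s","severity":"%s"},"annotations":{"summary":"manual test alert"}}]\n' \
    "$name" "$severity"
}

# POST the test alert to the Alertmanager at the given base URL.
send_test_alert() {
  local base_url="$1"
  build_test_alert "${2:-TestAlert}" "${3:-warning}" |
    curl -fsS -X POST -H 'Content-Type: application/json' \
      --data-binary @- "$base_url/api/v1/alerts"
}
```

For example, `send_test_alert http://<alertmanager-host>:<port>` should make the test alert show up in the Alertmanager UI and, shortly after, at the receiver.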

告警规则

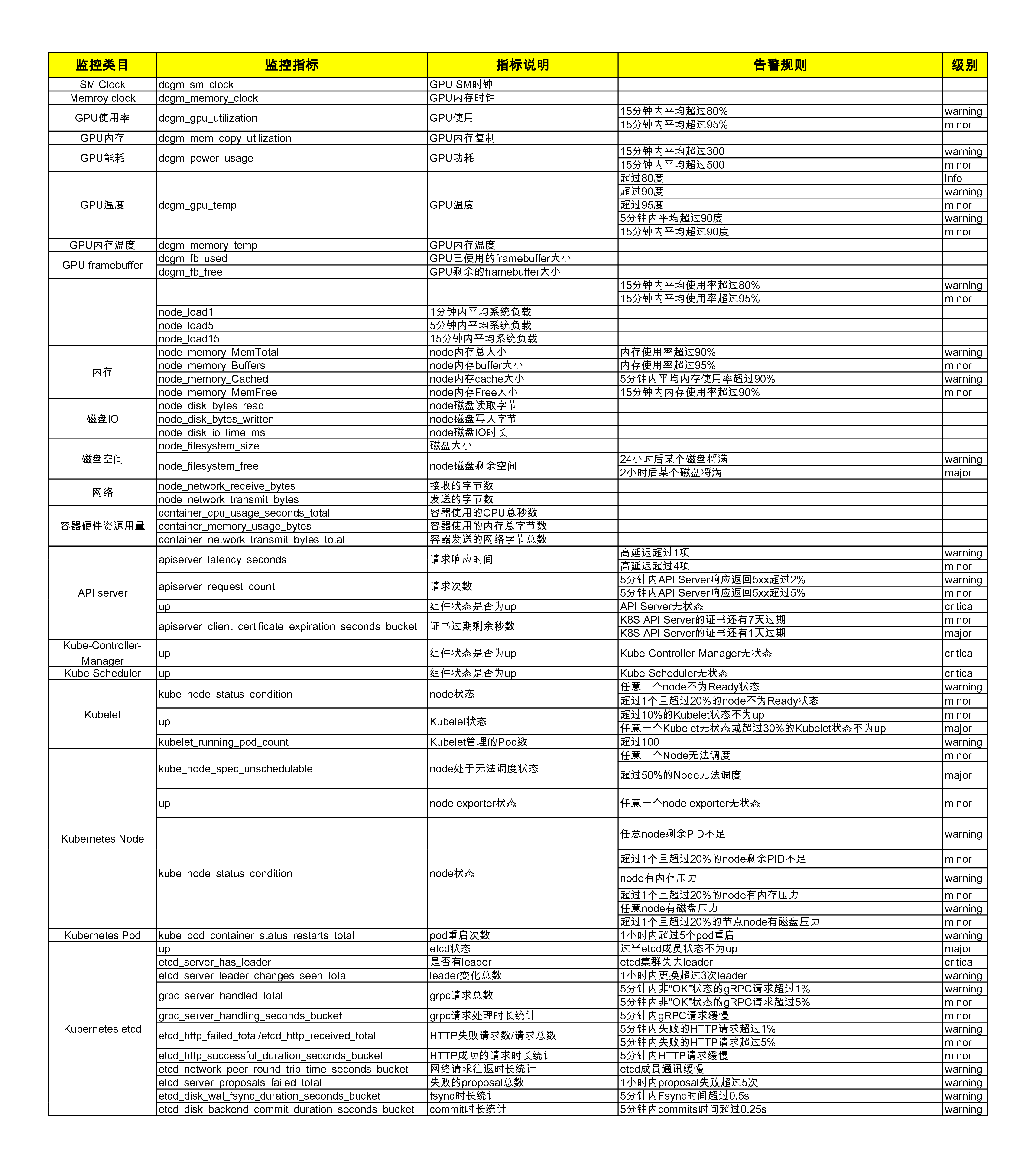

平台监控包括Node硬件(CPU、内存、磁盘、网络、GPU)、K8s组件(Kube-Controller-Manager、Kube-Scheduler、Kubelet、API Server)、K8s应用(Deployment、StatefulSet、Pod)等。

由于篇幅较长,因此将监控告警规则放在附录。

2. Start

    cd prometheus-operator

    cd kube-prometheus

3. Cleanup

    helm delete --purge kube-prometheus

    helm delete --purge prometheus-operator

FAQ

Kubelet metrics cannot be exposed

This problem does not occur on Kubernetes 1.13.0.

- On versions earlier than 1.13.0, change the scheme used to scrape kubelet metrics from https to http; otherwise the kubelet targets in Prometheus will be down. [github issue 926]

    vim kube-prometheus/charts/exporter-kubelets/templates/servicemonitor.yaml

    spec:

- Verification

The kubelet targets are visible on the Prometheus page.
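The same check can be done from the command line through the Prometheus HTTP API by querying the `up` metric. This is a sketch under assumptions: the Prometheus base URL is whatever you exposed earlier, the job label `kubelet` is a guess at how the chart names the job, and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: count targets of a job that Prometheus currently reports as down.

# Count series whose sample value is "0" in an instant-query response (stdin).
count_down_targets() {
  grep -o '"value":\[[^]]*,"0"]' | wc -l | tr -d ' '
}

# Query up{job="<job>"} and print how many of its targets are down.
down_targets_for_job() {
  local prom_url="$1" job="$2"
  curl -fsS -G "$prom_url/api/v1/query" \
    --data-urlencode "query=up{job=\"$job\"}" | count_down_targets
}
```

A result of 0 from `down_targets_for_job http://<prometheus-host>:<port> kubelet` means every kubelet target is being scraped successfully.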

Metrics of controller-manager and scheduler cannot be exposed

Method 1

For Kubernetes v1.13.0.

Add the following to kubeadm.conf and initialize the cluster with kubeadm init --config kubeadm.conf.

    apiVersion: kubeadm.k8s.io/v1alpha3
    kind: ClusterConfiguration
    kubernetesVersion: 1.13.0
    networking:
      podSubnet: 10.244.0.0/16
    controllerManagerExtraArgs:
      address: 0.0.0.0
    schedulerExtraArgs:
      address: 0.0.0.0
    ...

Label the pods.

    kubectl get po -n kube-system
    kubectl -n kube-system label po kube-controller-manager-<nodename> k8s-app=kube-controller-manager
    kubectl -n kube-system label po kube-scheduler-<nodename> k8s-app=kube-scheduler
    kubectl get po -n kube-system --show-labels

Verification

The kube-controller-manager and kube-scheduler targets are visible on the Prometheus page.

The status dashboards for controller-manager and scheduler are visible in Grafana.
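If a target stays down, it is worth checking the metrics endpoints directly from a node. The sketch below assumes the components listen on their default insecure ports (10251 for kube-scheduler, 10252 for kube-controller-manager) and are bound to 0.0.0.0 as configured above; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: probe the metrics endpoint of a control-plane component.

# Map a component name to its default insecure metrics port.
metrics_port_for() {
  case "$1" in
    kube-scheduler) echo 10251 ;;
    kube-controller-manager) echo 10252 ;;
    *) echo "unknown component: $1" >&2; return 1 ;;
  esac
}

# Fetch /metrics from the component on the given host and report success.
check_component_metrics() {
  local host="$1" component="$2" port
  port="$(metrics_port_for "$component")" || return 1
  curl -fsS --max-time 5 -o /dev/null "http://$host:$port/metrics" \
    && echo "$component on $host: ok"
}
```

For example, `check_component_metrics <master-ip> kube-scheduler` prints an "ok" line only when the endpoint answers; a connection refusal usually means the component is still bound to 127.0.0.1.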

Method 2

guide

For Kubernetes versions earlier than 1.13.0.

- Modify the core kubeadm configuration.

    kubeadm config view

Save the output above as newConfig.yaml and add the following two settings:

    controllerManagerExtraArgs:
      address: 0.0.0.0
    schedulerExtraArgs:
      address: 0.0.0.0

Apply the new configuration:

    kubeadm config upload from-file --config newConfig.yaml

Label the pods.

    kubectl get po -n kube-system
    kubectl -n kube-system label po kube-controller-manager-<nodename> k8s-app=kube-controller-manager
    kubectl -n kube-system label po kube-scheduler-<nodename> k8s-app=kube-scheduler
    kubectl get po -n kube-system --show-labels

Rebuild the exporters.

    kubectl -n kube-system get svc

You will see the following two Services that have no CLUSTER-IP:

    kube-prometheus-exporter-kube-controller-manager
    kube-prometheus-exporter-kube-scheduler

    kubectl -n kube-system get svc kube-prometheus-exporter-kube-controller-manager -o yaml

Save the output for each Service as newKubeControllerManagerSvc.yaml and newKubeSchedulerSvc.yaml respectively, remove the non-essential fields (such as uid, selfLink, resourceVersion, and creationTimestamp), and recreate the Services.

    kubectl delete -n kube-system svc kube-prometheus-exporter-kube-controller-manager kube-prometheus-exporter-kube-scheduler
    kubectl apply -f newKubeControllerManagerSvc.yaml
    kubectl apply -f newKubeSchedulerSvc.yaml
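Removing the server-generated fields by hand is error-prone, so the export step can be scripted. A minimal sketch that drops the four fields named above with a line filter; it assumes each of those fields sits on a single line of the YAML output, and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: export a Service manifest without server-generated metadata.

# Drop uid, selfLink, resourceVersion, and creationTimestamp lines (reads stdin).
strip_server_fields() {
  grep -vE '^[[:space:]]*(uid|selfLink|resourceVersion|creationTimestamp):'
}

# Print a cleaned manifest of a Service in kube-system.
export_clean_service() {
  local name="$1"
  kubectl -n kube-system get svc "$name" -o yaml | strip_server_fields
}
```

For example, `export_clean_service kube-prometheus-exporter-kube-scheduler > newKubeSchedulerSvc.yaml` produces a file that can be applied after the original Service is deleted. Run the export before the delete, otherwise there is nothing left to export.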

Make sure the Prometheus pod can reach kube-controller-manager on port 10252 and kube-scheduler on port 10251 of the node.

Verification is the same as in Method 1.

coredns cannot be exposed

In Kubernetes v1.13.0 the default cluster DNS component is coredns, so the kube-prometheus configuration has to be changed before the state of the DNS service can be monitored.

Method 1

- Change the selectorLabel value in the configuration to match the label on the coredns pods.

    kubectl -n kube-system get po --show-labels | grep coredns
    # Output
    coredns k8s-app=kube-dns

    vim kube-prometheus/charts/exporter-coredns/values.yaml

    #selectorLabel: coredns

Restart kube-prometheus.

    helm delete --purge kube-prometheus
    helm install --name kube-prometheus --namespace monitoring kube-prometheus

Verification

The kube-dns target is visible in Prometheus.

Method 2

Change the pod label to match the one in the configuration.

    kubectl -n kube-system label po

Verification is the same as in Method 1.

Appendix: platform monitoring alert rules

    vim charts/exporter-kube-controller-manager/templates/kube-controller-manager.rules.yaml

    {{ define "kube-controller-manager.rules.yaml.tpl" }}

    vim charts/exporter-kube-etcd/templates/etcd3.rules.yaml

    {{ define "etcd3.rules.yaml.tpl" }}

    vim charts/exporter-kubelets/templates/kubelet.rules.yaml

    {{ define "kubelet.rules.yaml.tpl" }}

    vim charts/exporter-kubernetes/templates/kubernetes.rules.yaml

    {{ define "kubernetes.rules.yaml.tpl" }}

    vim charts/exporter-kube-scheduler/templates/kube-scheduler.rules.yaml

    {{ define "kube-scheduler.rules.yaml.tpl" }}

    vim charts/exporter-kube-state/templates/kube-state-metrics.rules.yaml

    {{ define "kube-state-metrics.rules.yaml.tpl" }}

    vim charts/exporter-node/templates/node.rules.yaml

    {{ define "node.rules.yaml.tpl" }}